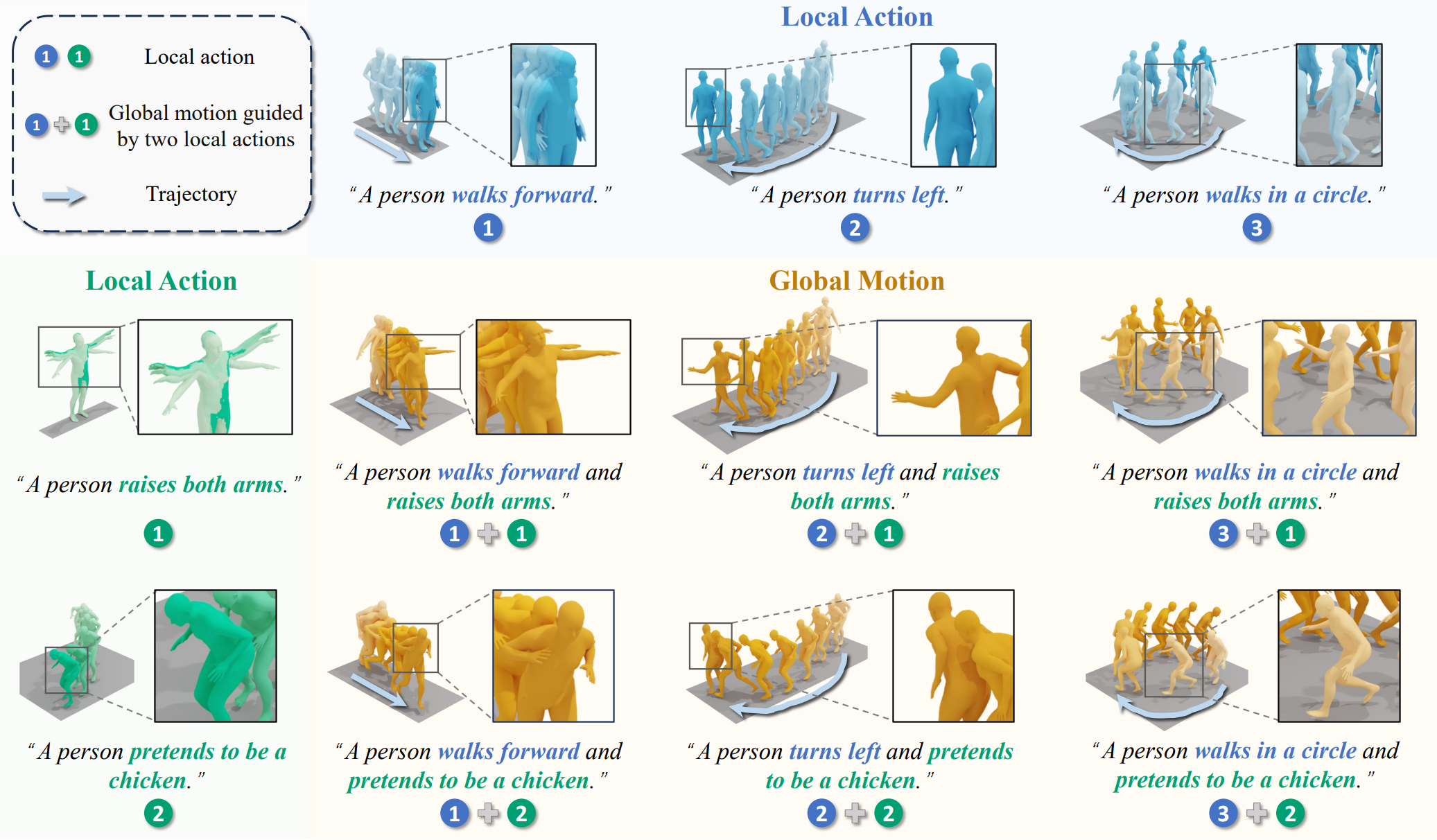

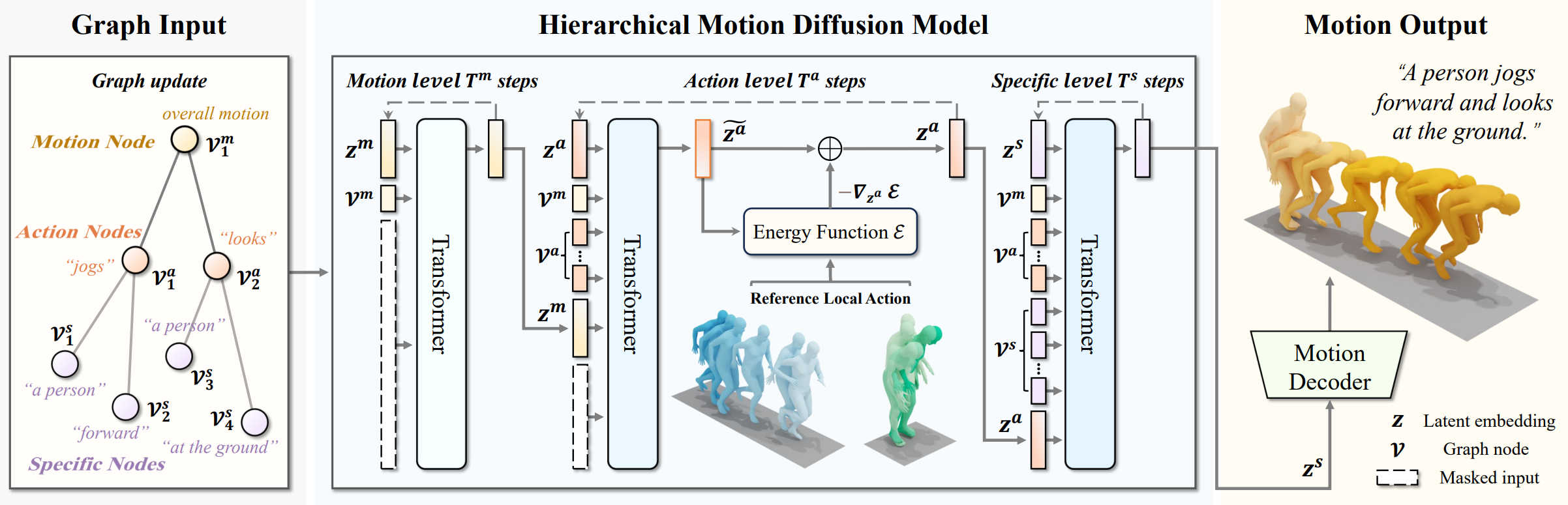

Text-to-motion generation requires not only grounding local actions in language but also seamlessly blending these individual actions to synthesize diverse and realistic global motions. However, existing motion generation methods primarily focus on the direct synthesis of global motions while neglecting the importance of generating and controlling local actions. In this paper, we propose the local action-guided motion diffusion model, which facilitates global motion generation by utilizing local actions as fine-grained control signals. Specifically, we provide an automated method for reference local action sampling and leverage graph attention networks to assess the guiding weight of each local action in the overall motion synthesis. During the diffusion process for synthesizing global motion, we calculate the local-action gradient to provide conditional guidance. This local-to-global paradigm reduces the complexity associated with direct global motion generation and promotes motion diversity via sampling diverse actions as conditions. Extensive experiments on two human motion datasets, i.e., HumanML3D and KIT, demonstrate the effectiveness of our method. Furthermore, our method offers flexibility in seamlessly combining various local actions and in continuously adjusting their guiding weights, accommodating diverse user preferences, which may hold potential significance for the community.
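To make the idea of weighted local-action guidance more concrete, here is a minimal sketch of a single gradient-guided update. It is not the paper's formulation. It assumes a simple quadratic energy that pulls segments of the global motion toward reference local actions, and it replaces the graph attention network with a plain softmax over hypothetical scores. All function names (`softmax`, `energy`, `local_action_gradient`, `guided_step`), the `(start_frame, reference)` layout, and the `scale` parameter are illustrative assumptions.

```python
import numpy as np

def softmax(scores):
    """Normalize raw scores into guiding weights that sum to 1.
    Assumption: a stand-in for weights a graph attention network would predict."""
    z = np.asarray(scores, dtype=float)
    e = np.exp(z - z.max())
    return e / e.sum()

def energy(motion, actions, weights):
    """Assumed energy: sum_i w_i * ||motion[start_i:end_i] - ref_i||^2."""
    total = 0.0
    for (start, ref), w in zip(actions, weights):
        end = start + ref.shape[0]
        total += w * np.sum((motion[start:end] - ref) ** 2)
    return total

def local_action_gradient(motion, actions, weights):
    """Gradient of the assumed energy with respect to the global motion."""
    grad = np.zeros_like(motion)
    for (start, ref), w in zip(actions, weights):
        end = start + ref.shape[0]
        grad[start:end] += 2.0 * w * (motion[start:end] - ref)
    return grad

def guided_step(motion, actions, weights, scale=0.1):
    """One guidance update: move the motion against the local-action gradient."""
    return motion - scale * local_action_gradient(motion, actions, weights)

# Toy example: 8 frames with 3 features each, and two reference local actions.
motion = np.zeros((8, 3))
actions = [(0, np.ones((3, 3))), (5, -np.ones((2, 3)))]  # (start_frame, reference)
weights = softmax([1.0, 0.0])  # hypothetical relevance scores
guided = guided_step(motion, actions, weights)
```

In this toy setup, raising a local action's weight pulls the corresponding frames harder toward its reference, which mirrors the continuous weight adjustment described above. In a real diffusion sampler, such a step would be interleaved with the denoising updates.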

In GuidedMotion, we provide an automatic local action sampling method, which decomposes the original motion description into multiple local action descriptions and uses a text-to-motion model to generate the reference local actions. Subsequently, we leverage graph attention networks to estimate the guiding weight of each local action in the overall motion synthesis. To enhance generation stability, we divide the motion diffusion process for synthesizing global motion into three stages:

@inproceedings{guidedmotion,
  title={Local Action-Guided Motion Diffusion Model for Text-to-Motion Generation},
  author={Jin, Peng and Li, Hao and Cheng, Zesen and Li, Kehan and Yu, Runyi and Liu, Chang and Ji, Xiangyang and Yuan, Li and Chen, Jie},
  booktitle={ECCV},
  year={2024}
}